Hailo, a Tel Aviv-based startup best known for its high-performance AI chips, today announced the launch of its M.2 and Mini PCIe AI acceleration modules. Based around its Hailo-8 chip, these new modules are meant to be used in edge devices for anything from smart city and smart home solutions to industrial applications.

Today’s launch comes about half a year after the company announced a $60 million Series B funding round. At the time, Hailo said it was raising those funds to roll out its new AI chips, and with today’s launch, it’s making good on that promise. In total, the company has now raised $88 million.

“Manufacturers across industries understand how crucial it is to integrate AI capabilities into their edge devices. Simply put, solutions without AI can no longer compete,” said Orr Danon, CEO of Hailo, in today’s announcement. “Our new Hailo-8 M.2 and Mini PCIe modules will empower companies worldwide to create new powerful, cost-efficient, innovative AI-based products with a short time-to-market – while staying within the systems’ thermal constraints. The high efficiency and top performance of Hailo’s modules are a true gamechanger for the edge market.”

Developers can still use frameworks like TensorFlow and ONNX to build their models, and Hailo’s Dataflow compiler will handle the rest. One thing that makes Hailo’s chips different is their architecture, which allows them to automatically adapt to the needs of the neural network running on them.

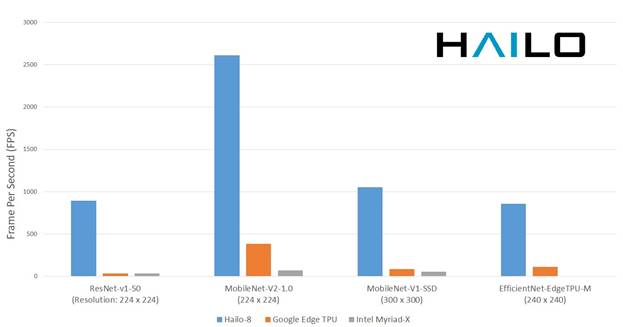

Hailo is not shy about comparing its solution to that of heavyweights like Intel, Google and Nvidia. With 26 tera-operations per second (TOPS) and power efficiency of 3 TOPS/W, the company claims its edge modules can analyze significantly more frames per second than Intel’s Myriad-X and Google’s Edge TPU modules — all while also being far more energy efficient.
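As a rough sanity check on those two figures, the short Python sketch below computes the power draw they would imply together. It assumes that the 26 TOPS and 3 TOPS/W numbers describe the same operating point, which the announcement does not state, and the function name is purely illustrative.

```python
# Back-of-the-envelope check of the figures quoted in the article.
# Assumption: both numbers apply at the same operating point,
# which the announcement does not confirm.
PEAK_TOPS = 26.0               # quoted throughput
EFFICIENCY_TOPS_PER_W = 3.0    # quoted power efficiency


def implied_power_watts(tops: float, tops_per_watt: float) -> float:
    """Return the power draw implied by a throughput and an efficiency figure."""
    if tops_per_watt <= 0:
        raise ValueError("efficiency must be positive")
    return tops / tops_per_watt


if __name__ == "__main__":
    watts = implied_power_watts(PEAK_TOPS, EFFICIENCY_TOPS_PER_W)
    print(f"Implied power at peak throughput: {watts:.1f} W")
```

Under that assumption the result is a little under 9 W. Real-world draw depends on the workload and may differ considerably.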

Hailo is already working with Foxconn to integrate the M.2 module into Foxconn’s “BOXiedge” edge computing platform. Because it’s just a standard M.2 module, Foxconn was able to integrate it without any rework. Using the Hailo-8 M.2 solution, this edge computing server can process 20 camera streams at the same time.

“Hailo’s M.2 and Mini PCIe modules, together with the high-performance Hailo-8 AI chip, will allow many rapidly evolving industries to adopt advanced technologies in a very short time, ushering in a new generation of high performance, low power, and smarter AI-based solutions,” said Dr. Gene Liu, VP of Semiconductor Subgroup at Foxconn Technology Group.

from TechCrunch https://ift.tt/30kLHMG
