Research papers come out far too rapidly for anyone to read them all, especially in the field of machine learning, which now affects (and produces papers in) practically every industry and company. This column aims to collect the most relevant recent discoveries and papers — particularly in but not limited to artificial intelligence — and explain why they matter.

The topics in this week’s Deep Science column are a real grab bag, ranging from planetary science to whale tracking. There are also some interesting insights from tracking how social media is used and some work that attempts to shift computer vision systems closer to human perception (good luck with that).

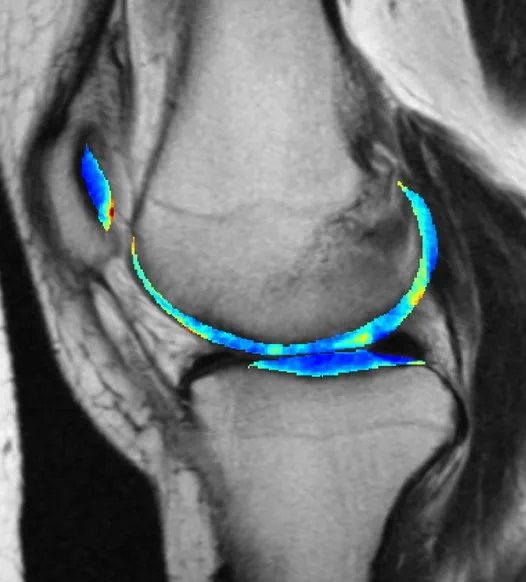

ML model detects arthritis early

One of machine learning’s most reliable use cases is training a model on a target pattern, say a particular shape or radio signal, and setting it loose on a huge body of noisy data to find possible hits that humans might struggle to perceive. This has proven useful in the medical field, where early indications of serious conditions can be spotted with enough confidence to recommend further testing.

This arthritis detection model looks at X-rays, the same as doctors who do that kind of work. But by the time osteoarthritis is visible to the human eye, the damage is already done. A long-running project that tracked thousands of people for seven years made for a great training set, making the nearly imperceptible early signs of osteoarthritis visible to the AI model, which predicted the condition with 78% accuracy three years out.

The bad news is that knowing early doesn’t necessarily mean it can be avoided, as there’s no effective treatment. But that knowledge can be put to other uses — for example, much more effective testing of potential treatments. “Instead of recruiting 10,000 people and following them for 10 years, we can just enroll 50 people who we know are going to be getting osteoarthritis … Then we can give them the experimental drug and see whether it stops the disease from developing,” said co-author Kenneth Urish. The study appeared in PNAS.

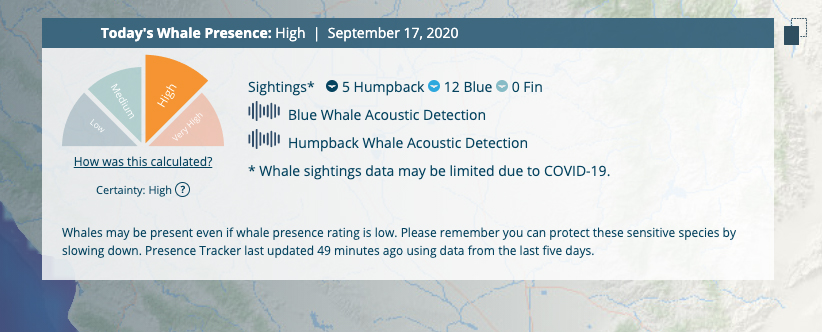

Using acoustic monitoring to preemptively save the whales

It’s amazing to think that ships still collide with and kill large whales on a regular basis, but it’s true. Voluntary speed reductions haven’t been much help, but a smart, multisource system called Whale Safe is being put into play in the Santa Barbara Channel that could give everyone a better idea of where the creatures are in real time.

The system uses underwater acoustic monitoring, near-real-time forecasting of likely feeding areas, actual sightings and a dash of machine learning (to identify whale calls quickly) to produce a prediction for whale presence along a given course. Large container ships can then make small adjustments well ahead of time instead of trying to avoid a pod at the last minute.
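To make the idea of blending several sources concrete, here is a minimal sketch in Python. It is not Whale Safe's actual method, which the team doesn't detail here. It assumes each source can be boiled down to a probability for a stretch of the course, and it combines them with a simple "noisy-OR" rule that treats the sources as independent. Every name in it (`SegmentEvidence`, `presence_probability`, `course_risk`) is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SegmentEvidence:
    """Hypothetical inputs for one stretch of a course, each in [0, 1]."""
    acoustic: float  # confidence that a whale call was detected nearby
    habitat: float   # forecast likelihood that this is a feeding area
    sighting: float  # confidence drawn from recent visual sightings


def presence_probability(e: SegmentEvidence) -> float:
    """Noisy-OR: whales count as present if at least one source is right.

    Assumes the three sources are independent, which is a simplification.
    """
    p_absent = (1 - e.acoustic) * (1 - e.habitat) * (1 - e.sighting)
    return 1 - p_absent


def course_risk(segments: list[SegmentEvidence]) -> float:
    """Score a whole course by its riskiest segment."""
    return max(presence_probability(s) for s in segments)
```

A ship's planner could compare `course_risk` for a few candidate courses and pick the lowest. Scoring by the riskiest segment is a deliberately cautious choice for this sketch; a real system might weight segments by length or time spent in them.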

“Predictive models like this give us a clue for what lies ahead, much like a daily weather forecast,” said Briana Abrahms, who led the effort from the University of Washington. “We’re harnessing the best and most current data to understand what habitats whales use in the ocean, and therefore where whales are most likely to be as their habitats shift on a daily basis.”

Incidentally, Salesforce founder Marc Benioff and his wife Lynne helped establish the UC Santa Barbara center that made this possible.

from TechCrunch https://ift.tt/2HEufw1
