Apple has acquired Akonia Holographics, a Denver-based startup that manufactures augmented reality waveguide lenses. Apple confirmed the acquisition to Reuters, which first reported the news.

An Apple spokesperson gave TechCrunch the company’s standard statement, “Apple buys smaller technology companies from time to time, and we generally don’t discuss our purpose or plans.”

This acquisition offers the clearest confirmation yet from Apple that it is investing resources into technologies that support the development of a lightweight augmented reality headset. Reports over the years have suggested that Apple plans to release consumer AR glasses within the next few years.

In late 2017, we reported that Apple had acquired Vrvana, a mixed-reality headset company with a device that offered users pass-through augmented reality experiences on a conventional opaque display. This latest acquisition seems to offer a much clearer guide to where Apple’s consumer ambitions may take it for a head-worn augmented reality device.

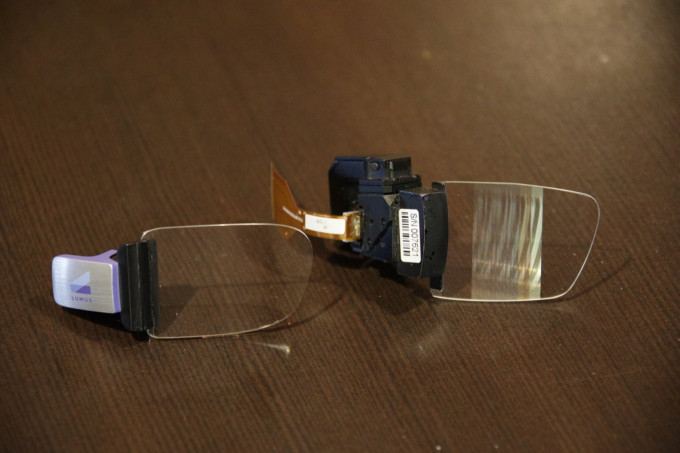

Waveguide displays have become the de facto optic technology for augmented reality headsets. They come in a few different flavors, but all of them essentially involve beaming an image into the edge of a piece of glass, where it bounces through the lens off etchings (or other irregularities) until it is eventually directed to the user's eyes. Waveguide lenses are currently used in AR headsets sold by Magic Leap and Microsoft, among many others.

A reflective waveguide display built by Lumus.
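The bouncing described above relies on total internal reflection: light traveling inside the glass stays trapped as long as it strikes the surface at more than a critical angle, θc = arcsin(n_outside / n_glass), measured from the surface normal. The short Python sketch that follows is our own illustration, not drawn from Akonia's or any other vendor's design; the function names and the refractive indices used are hypothetical example values.

```python
import math


def critical_angle_deg(n_glass: float, n_outside: float = 1.0) -> float:
    """Smallest angle of incidence (from the surface normal) at which light
    inside glass of index n_glass is totally internally reflected at a
    boundary with a medium of index n_outside (air by default)."""
    if n_outside >= n_glass:
        raise ValueError("total internal reflection requires n_glass > n_outside")
    return math.degrees(math.asin(n_outside / n_glass))


def is_trapped(angle_deg: float, n_glass: float, n_outside: float = 1.0) -> bool:
    """True if a ray hitting the surface at angle_deg stays inside the glass."""
    return angle_deg > critical_angle_deg(n_glass, n_outside)


# Hypothetical glass indices, chosen only for illustration.
for n in (1.5, 1.8, 2.0):
    print(f"n = {n}: critical angle = {critical_angle_deg(n):.1f} degrees")
```

Because higher-index glass lowers the critical angle, it lets a wider range of ray angles stay trapped inside the lens, which is one reason waveguide makers pursue high-index materials when trying to widen the field of view.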

They're popular because they allow for thin, largely transparent designs, though they also often have issues with color reproduction, and the displays can only become so large before the images grow distorted. Akonia's marketing materials claim that its "HoloMirror" solution can "display vibrant, full-color, wide field-of-view images."

According to Crunchbase, the startup raised $11.6 million in funding.

While many of Apple’s largest technology competitors have already experimented with AR headsets, Apple has directed the majority of its early consumer-facing efforts to phone-based AR technologies that track the geometry of spaces and can “project” digital objects onto surfaces.

Apple ARKit

The biggest open question about Apple's rumored AR glasses is which approach the company will take first. It could ship a higher-powered device akin to Magic Leap's that tracks a user's environment and builds on Apple's interactive ARKit tech. Or its first release could be more conservative, treating AR glasses as more of a head-worn Apple Watch that presents a user's notifications and enables light interactions.

Moving forward with waveguide displays would certainly leave both options open for the company, though given the narrow field of view that even today's widest waveguides offer, I expect Apple may opt for the latter, barring a big technical breakthrough or a heavily delayed release.

from TechCrunch https://ift.tt/2okEzwj

via IFTTT
