Farming sustainably and efficiently has gone from a big tractor problem to a big data problem over the last few decades, and startup EarthOptics believes the next frontier of precision agriculture lies deep in the soil. Using high-tech imaging techniques, the company claims to map the physical and chemical composition of fields faster, better, and more cheaply than traditional methods, and has raised $10.3M to scale its solution.

“Most of the ways we monitor soil haven’t changed in 50 years,” EarthOptics founder and CEO Lars Dyrud told TechCrunch. “There’s been a tremendous amount of progress around precision data and using modern data methods in agriculture, but a lot of that has focused on the plants and in-season activity; there’s been comparatively little investment in soil.”

While you might think it’s obvious to look deeper into the stuff the plants are growing from, the simple fact is it’s difficult to do. Aerial and satellite imagery and IoT-infused sensors for things like moisture and nitrogen have made surface-level data for fields far richer, but past the first foot or so things get tricky.

Different parts of a field may have very different physical characteristics like soil compaction, which can greatly affect crop outcomes, and chemical characteristics like dissolved nutrients and the microbiome. The best way to check these things, however, involves “putting a really expensive stick in the ground,” said Dyrud. The lab results from these samples affect decisions about which parts of a field need to be tilled and fertilized.

It’s still important, so farms get it done, but having soil sampled every few acres once or twice a year adds up fast when you have 10,000 acres to keep track of. So many just till and fertilize everything for lack of data, sinking a lot of money (Dyrud estimated the U.S. does about $1B in unnecessary tilling) into processes that might have no benefit and in fact might be harmful: tilling can release tons of carbon that was safely sequestered underground.

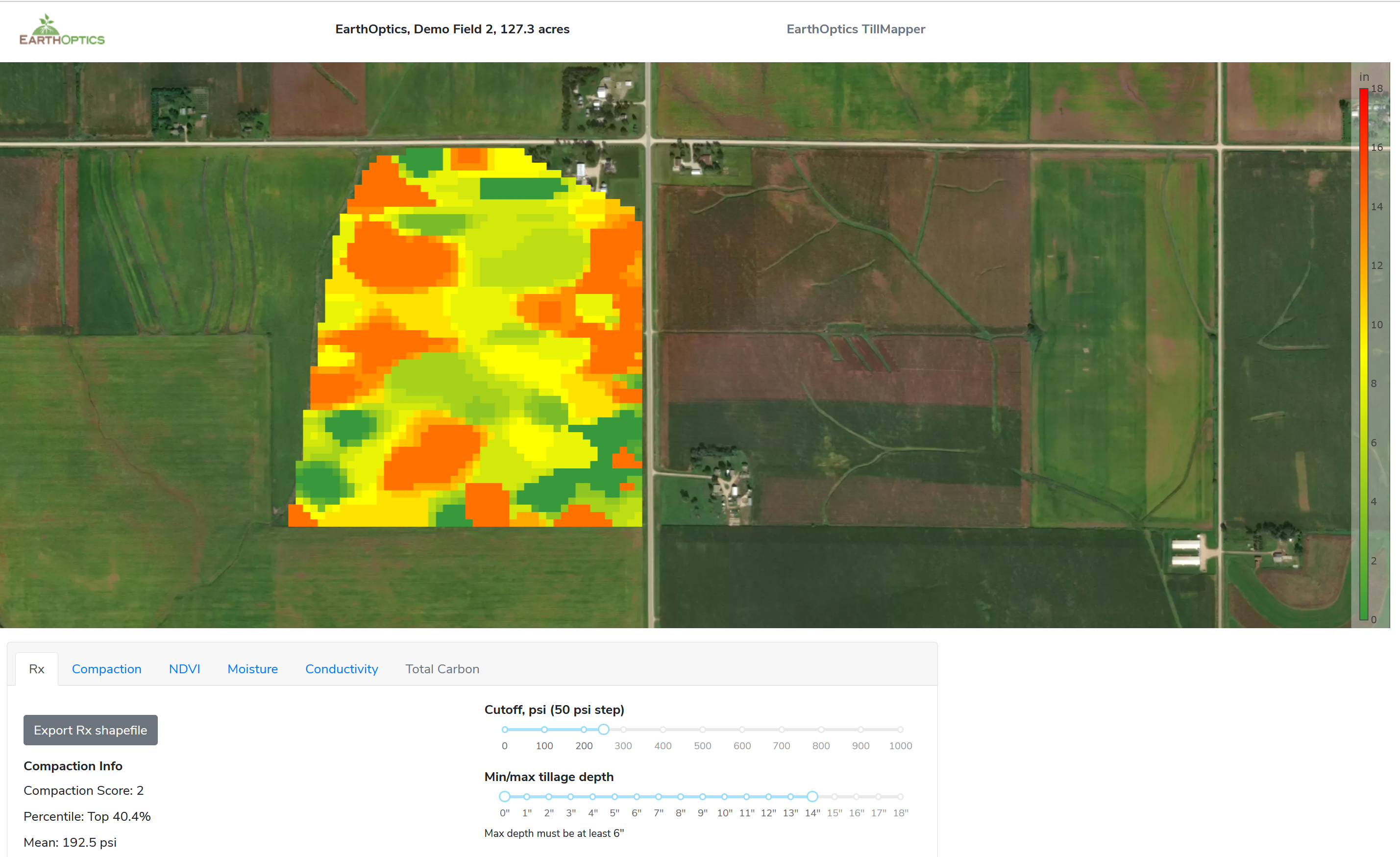

EarthOptics aims to make the data collection process better, essentially by minimizing the “expensive stick” part. It has built an imaging suite that relies on ground-penetrating radar and electromagnetic induction to produce a deep map of the soil that’s easier, cheaper, and more precise than extrapolating acres of data from a single sample.

Machine learning is at the heart of the company’s pair of tools, GroundOwl and C-Mapper (C as in carbon). The team trained a model that reconciles the no-contact data with traditional samples taken at a much lower rate, learning to predict soil characteristics accurately at a level of precision far beyond what has traditionally been possible. The imaging hardware can be mounted on ordinary tractors or trucks, and pulls in readings every few feet. Physical sampling still happens, but dozens rather than hundreds of times.
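To make the idea of reconciling dense no-contact readings with a handful of physical samples concrete, here is a minimal sketch. It is not EarthOptics’ model, which the company has not published; plain least-squares regression stands in for whatever machine learning they actually use, and every name and number below (`fit_calibration`, the radar and induction readings, the lab values) is hypothetical.

```python
from typing import List, Sequence


def solve_linear_system(a: Sequence[Sequence[float]], b: Sequence[float]) -> List[float]:
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):  # back substitution
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def fit_calibration(sensor_rows: Sequence[Sequence[float]],
                    lab_values: Sequence[float]) -> List[float]:
    """Least-squares fit of lab_value ~ w0 + w1*radar + w2*induction."""
    rows = [[1.0, *r] for r in sensor_rows]  # prepend intercept column
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * y for r, y in zip(rows, lab_values)) for i in range(k)]
    return solve_linear_system(xtx, xty)


def predict(weights: Sequence[float], sensor_row: Sequence[float]) -> float:
    """Estimate the soil property at a location that was scanned but not sampled."""
    return weights[0] + sum(w * v for w, v in zip(weights[1:], sensor_row))


# Hypothetical data: (radar, induction) readings at the few spots that were
# also physically sampled, with the lab-measured value for each spot.
sampled_readings = [(0.2, 1.0), (0.5, 3.0), (0.9, 2.0), (0.4, 4.0)]
lab_results = [1.9, 3.5, 3.8, 3.8]

weights = fit_calibration(sampled_readings, lab_results)
# Apply the fitted relationship to a scan-only location.
estimate = predict(weights, (0.6, 2.0))
```

The point of the sketch is the workflow rather than the model: a few expensive samples anchor the relationship, and the cheap, dense sensor readings carry it across the rest of the field.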

With today’s methods, you might divide your thousands of acres into 50-acre chunks: this one needs more nitrogen, this one needs tilling, this one needs this or that treatment. EarthOptics brings that down to the scale of meters, and the data can be fed directly into roboticized field machinery like a variable-depth smart tiller.

Drive it along the fields and it goes only as deep as it needs to. Of course, not everyone has state-of-the-art equipment, so the data can also be put out as a more ordinary map telling the driver in a more general sense when to till or perform other tasks.
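As a rough illustration of the two outputs described above, the sketch below turns a meter-scale grid of compaction depths into a per-cell tilling depth for a variable-depth tiller, and also into a simple till-or-skip flag for a driver without such equipment. The grid values, the 45 cm maximum depth, the 2 cm margin, and all function names are assumptions made up for this example, not details from EarthOptics.

```python
from typing import List, Optional

# Hypothetical limits for an imagined variable-depth tiller.
MAX_DEPTH_CM = 45.0
MARGIN_CM = 2.0


def tilling_depth_cm(compaction_depth_cm: Optional[float]) -> float:
    """Till just below the compacted layer; do not till where none was found."""
    if compaction_depth_cm is None:
        return 0.0
    return min(compaction_depth_cm + MARGIN_CM, MAX_DEPTH_CM)


def prescription(grid: List[List[Optional[float]]]) -> List[List[float]]:
    """Per-cell tilling depths for machinery that can vary depth on the fly."""
    return [[tilling_depth_cm(cell) for cell in row] for row in grid]


def driver_map(grid: List[List[Optional[float]]]) -> List[str]:
    """Coarser output: one 'till' or 'skip' instruction per row of cells."""
    return ["till" if any(cell is not None for cell in row) else "skip"
            for row in grid]


# Hypothetical compaction map: depth in cm to the compacted layer,
# or None where the scan found no compaction worth tilling.
compaction_grid = [
    [None, 20.0, 25.0],
    [None, None, None],
    [50.0, 30.0, None],
]

depths = prescription(compaction_grid)
instructions = driver_map(compaction_grid)
```

In this toy grid the middle row is skipped entirely, which is the kind of saving the article describes: untilled ground costs nothing and keeps its carbon where it is.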

If this approach takes off, it could mean major savings for farmers looking to tighten belts, or improved productivity per acre and dollar for those looking to scale up. And ultimately the goal is to enable automated and robotic farming as well. That transition is in an early stage as equipment and practices get hammered out, but one thing they will all need is good data.

Dyrud said he hopes to see the EarthOptics sensor suite on robotic tractors, tillers, and other farm equipment, but that their product is very much the data and the machine learning model they’ve trained up with tens of thousands of ground truth measurements.

The $10.3M A round was led by Leaps by Bayer (the conglomerate’s impact arm), with participation from S2G Ventures, FHB Ventures, Middleland Capital’s VTC Ventures and Route 66 Ventures. The plan for the money is to scale up the two existing products and get to work on the next one: moisture mapping, obviously a major consideration for any farm.

from TechCrunch https://ift.tt/2Z8rtYw
