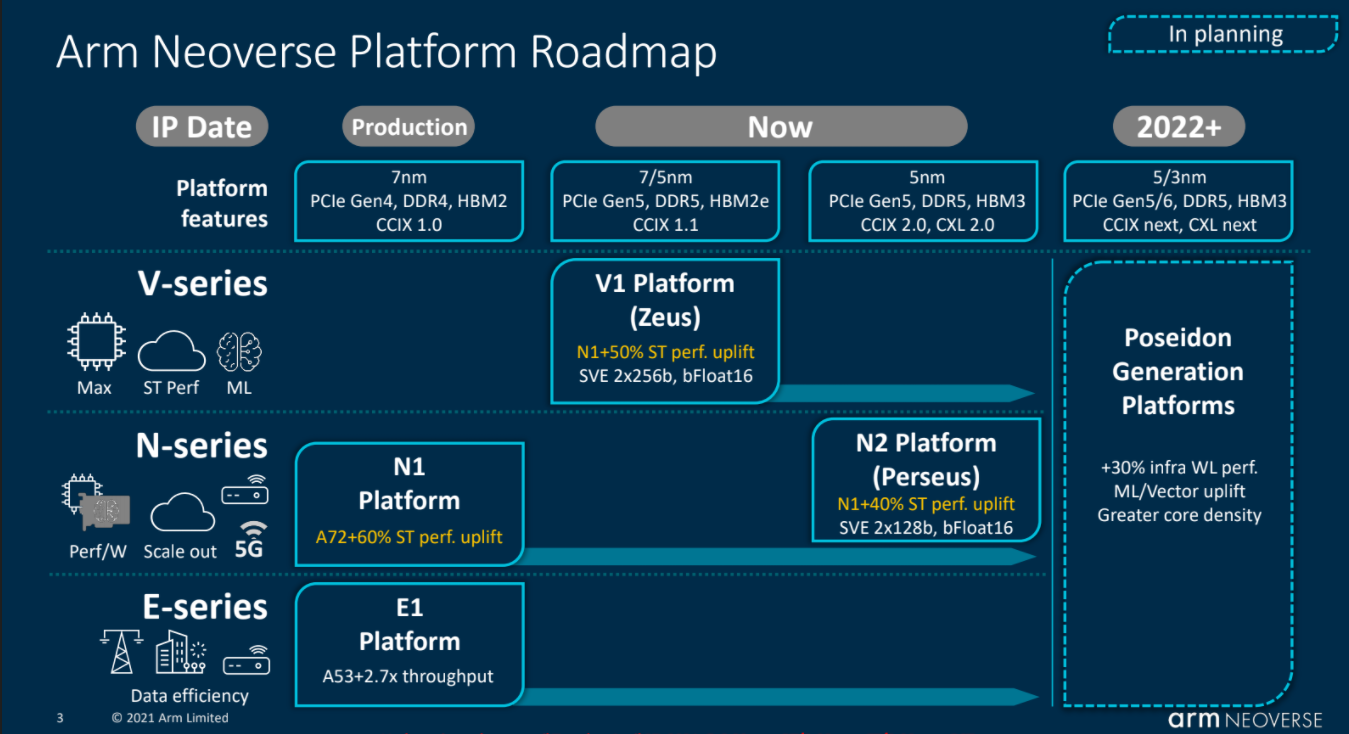

Arm today announced the launch of two new platforms, Arm Neoverse V1 and Neoverse N2, as well as a new mesh interconnect for them. As you can tell from the name, V1 is a completely new product and maybe the best example yet of Arm’s ambitions in the data center, high-performance computing and machine learning space. N2 is Arm’s next-generation general compute platform, meant to span use cases from hyperscale clouds to SmartNICs and edge workloads. N2 is also the first design based on the company’s new Armv9 architecture.

Not too long ago, high-performance computing was dominated by a small number of players, but the Arm ecosystem has scored its fair share of wins here recently, with supercomputers in South Korea, India and France betting on it. The promise of V1 is that it will vastly outperform the older N1 platform, with a 2x gain in floating-point performance, for example, and a 4x gain in machine learning performance.

“The V1 is about how much performance can we bring, and that was the goal,” Chris Bergey, SVP and GM of Arm’s Infrastructure Line of Business, told me. He also noted that the V1 is Arm’s widest architecture yet, and that while it wasn’t built specifically for the HPC market, HPC was definitely a target. The current Neoverse V1 platform isn’t based on the new Armv9 architecture, but the next generation will be.

N2, on the other hand, is all about getting the most performance per watt, Bergey stressed. “This is really about staying in that same performance-per-watt-type envelope that we have within N1 but bringing more performance,” he said. In Arm’s testing, NGINX saw a 1.3x performance increase versus the previous generation, for example.

In many ways, today’s release is also a chance for Arm to highlight its recent customer wins. AWS Graviton2 is obviously doing quite well, but Oracle is also betting on Ampere’s Arm-based Altra CPUs for its cloud infrastructure.

“We believe Arm is going to be everywhere — from edge to the cloud. We are seeing N1-based processors deliver consistent performance, scalability and security that customers want from Cloud infrastructure,” said Bev Crair, senior VP, Oracle Cloud Infrastructure Compute. “Partnering with Ampere Computing and leading ISVs, Oracle is making Arm server-side development a first-class, easy and cost-effective solution.”

Meanwhile, Alibaba Cloud and Tencent are both investing in Arm-based hardware for their cloud services as well, while Marvell will use the Neoverse N2 architecture for its OCTEON networking solutions.

from TechCrunch https://ift.tt/2R3elji
