Google announced this morning a new set of developer policies aimed at providing additional protections for children and families seeking out kid-friendly apps on Google Play. The new policies require that developers ensure their apps are meeting all the necessary policy and regulatory requirements for apps that target children in terms of their content, ads, and how they handle personally identifiable information.

For starters, developers are being asked to consider whether children are a part of their target audience — and, if they’re not, developers must ensure their app doesn’t unintentionally appeal to them. Google says it will now also double-check an app’s marketing to confirm this is the case and ask for changes, as needed.

Apps that do target children have to meet the policy requirements concerning content and handling of personally identifiable information. This shouldn’t be new to developers playing by the rules, as Google has had policies around “kid-safe” apps for years as part of its “Designed for Families” program, and countries have their own regulations to follow when it comes to collecting children’s data.

In addition, developers whose apps target children may serve ads only from an ad network that has certified compliance with Google’s families policies.

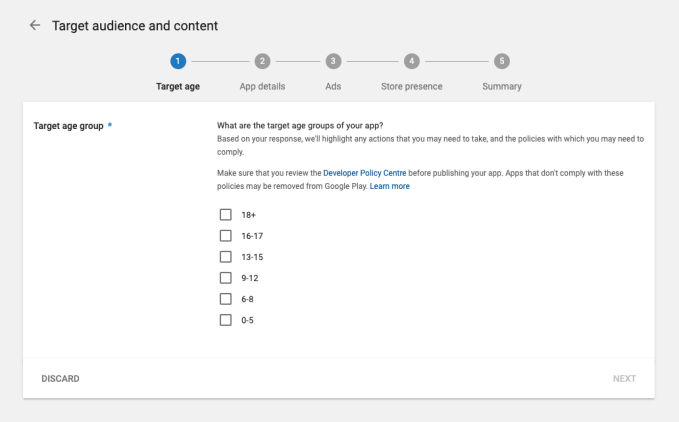

To enforce these policies at scale, Google is now requiring all developers to complete the new target audience and content section of the Google Play Console. Here, they will have to specify more details about their app. If they say that children are targeted, they’ll be directed to the appropriate policies.

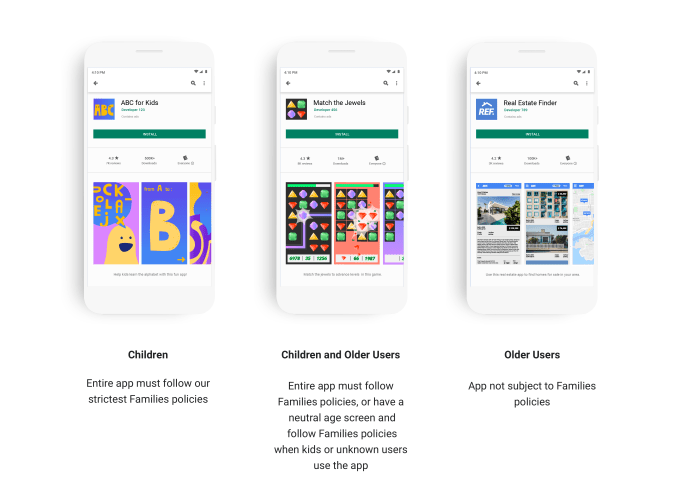

Google will use this information, alongside its review of the app’s marketing materials, in order to categorize apps and apply policies across three target groups: children, children and older users, and older users. (And because the definition of “children” may vary by country, developers will need to determine what age-based restrictions apply in the countries where their app is listed.)

Developers must complete the target audience and content section in the Google Play Console and comply with the updated policies by September 1, 2019.

The company says it’s committed to providing “a safe, positive environment” for kids and families, which is why it’s announcing these changes.

However, the changes are more likely inspired by an FTC complaint filed in December, in which a coalition of 22 consumer and public health advocacy groups, led by Campaign for a Commercial-Free Childhood (CCFC) and Center for Digital Democracy (CDD), asked for an investigation of kids’ apps on Google Play.

The organizations claimed that Google was not verifying apps and games featured in the Family section of Google Play for compliance with U.S. children’s privacy law COPPA.

They also said many so-called “kids” apps exhibited bad behaviors, such as showing ads that are difficult to exit or that must be viewed in order to continue the current game. Some apps pressured kids into making in-app purchases, and others served ads for alcohol and gambling. Still others were found to model harmful behavior or contain graphic, sexualized images, the groups warned regulators.

Violations like these can no longer slip through the cracks, thanks to increased regulatory oversight across the online industry through laws like the EU’s GDPR, which focuses on data protection and privacy. The FTC is also more willing to act; it recently handed TikTok a record fine for violating COPPA.

The target audience and content section is live today in the Google Play Console, along with documentation on the new policies, a developer guide, and online training. Google says it has also increased its staffing and improved its communications for the Google Play app review and appeals processes to help developers get timely decisions and understand any changes they’re directed to make.

from TechCrunch https://tcrn.ch/2ERZWhh
