In its latest monitoring report of a voluntary Code of Conduct on illegal hate speech, which platforms including Facebook, Twitter and YouTube signed up to in Europe back in 2016, the European Commission has said progress is being made on speeding up takedowns but tech firms are still lagging when it comes to providing feedback and transparency around their decisions.

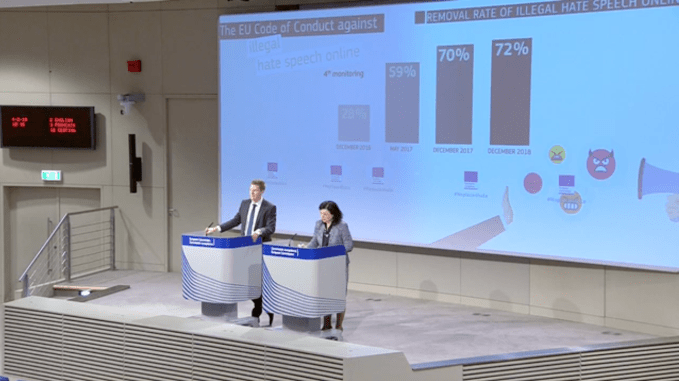

Tech companies are now assessing 89% of flagged content within 24 hours and removing 72% of the content deemed to be illegal hate speech, according to the Commission. When the Code was launched more than two years ago, those figures were just 40% and 28%, respectively.

However, it said today that platforms still aren’t giving users enough feedback on their reports. It urged them to be more transparent, pressed for progress “in the coming months” and warned that it could still propose pan-EU legislation if it deems it necessary.

Giving her assessment of how the (still) voluntary code on hate speech takedowns is operating at a press briefing today, Commissioner Vera Jourova said: “The only real gap that remains is transparency and the feedback to users who sent notifications [of hate speech].

“On average about a third of the notifications do not receive a feedback detailing the decision taken. Only Facebook has a very high standard, sending feedback systematically to all users. So we would like to see progress on this in the coming months. Likewise the companies should be more transparent towards the general public about what is happening in their platforms. We would like to see them make more data available about the notices and removals.”

“The fight against illegal hate speech online is not over. And we have no signs that such content has decreased on social media platforms,” she added. “Let me be very clear: The good results of this monitoring exercise don’t mean the companies are off the hook. We will continue to monitor this very closely and we can always consider additional measures if efforts slow down.”

Jourova highlighted additional steps the Commission has taken to support its overarching goal of clearing what she dubbed a “sewage of words” off online platforms. These include facilitating data-sharing between tech companies and police forces to help move forward investigations and prosecutions of those who spread hate speech.

She also noted that the Commission continues to brief Member States’ justice ministers on how the voluntary code is operating, warning again: “We always discuss that we will continue but if it slows down or it stops delivering the results we will consider some kind of regulation.”

Germany passed its own social media hate speech takedown law in 2017, with the so-called ‘NetzDG’ law coming fully into force at the start of 2018. The law provides for fines of up to €50M for companies that fail to remove manifestly illegal hate speech within 24 hours, and it has led social media platforms such as Facebook to plough more resources into locally based moderation teams.

In the UK, meanwhile, the government announced a plan last year to legislate on social media safety, although it has yet to publish a White Paper setting out the details of its policy.

Last week, a UK parliamentary committee that has been investigating the impact of social media and screen use on children recommended that the government legislate to place a legal ‘duty of care’ on platforms to protect minors.

The committee also called for platforms to be more transparent, urging them to give bona fide researchers access to high-quality anonymized data to allow robust scrutiny of social media’s effects on children and other vulnerable users.

Debate about the risks and impacts of social media platforms for children has intensified in the UK in recent weeks, following reports of the suicide of a 14-year-old schoolgirl whose father blamed Instagram for exposing her to posts encouraging self-harm. He said he had no doubt that content she had been exposed to on the platform had helped kill her.

During today’s press conference, Jourova was asked whether the Commission intends to extend the Code of Conduct on illegal hate speech to other types of content that are attracting concern, such as bullying and suicide. She said the executive body does not intend to expand into such areas.

She said the Commission’s focus remains on content judged illegal under existing European legislation on racism and xenophobia, and that it is up to individual Member States to legislate in additional areas if they feel the need.

“We are following what the Member States are doing because we see… to some extent a fragmented picture of different problems in different countries,” she noted. “We are focusing on what is our obligation to promote the compliance with the European law. Which is the framework decision against racism and xenophobia.

“But we have the group of experts from the Member States, in the so-called Internet forum, where we speak about other crimes or sources of hatred online. And we see the determination on the side of the Member States to take proactive measures against these matters. So we expect that if there is such a worrying trend in some Member State that will address it by means of their national legislation.”

“I will always tell you I don’t like the fragmentation of the legal framework, especially when it comes to digital because we are faced with, more or less, the same problems in all the Member States,” she added. “But it’s true that when you [take a closer look] you see there are specific issues in the Member States, also maybe related with their history or culture, which at some moment the national authorities find necessary to react on by regulation. And the Commission is not hindering this process.

“This is the sovereign decision of the Member States.”

Four more tech platforms joined the voluntary code of conduct on illegal hate speech last year: Google+, Instagram, Snapchat and Dailymotion. French gaming platform Webedia (jeuxvideo.com) also announced its participation today.

Drilling down into the performance of specific platforms, the Commission’s monitoring exercise found that Facebook assessed 92.6% of hate speech reports in less than 24 hours and a further 5.1% in less than 48 hours. The corresponding figures for YouTube were 83.8% and 7.9%, and for Twitter 88.3% and 7.3%.

Instagram assessed 77.4% of notifications in less than 24 hours, while Google+, which closes to consumers this April in any case, assessed just 60%.

In terms of removals, the Commission found that YouTube removed 85.4% of reported content, Facebook 82.4% and Twitter 43.5% (the latter a slight decrease on last year), while Google+ removed 80.0% and Instagram 70.6%.

The Commission argues that although platforms are removing illegal content “more and more rapidly” as a result of the code, this has not led to “over-removal”. It points to variable removal rates as an indication that “the review made by the companies continues to respect freedom of expression”.

“Removal rates varied depending on the severity of hateful content,” the Commission writes. “On average, 85.5% of content calling for murder or violence against specific groups was removed, while content using defamatory words or pictures to name certain groups was removed in 58.5% of the cases.”

“This suggests that the reviewers assess the content scrupulously and with full regard to protected speech,” it adds.

The Commission also credits the code with helping to foster partnerships between civil society organisations, national authorities and tech platforms on key issues such as awareness-raising and education activities.

from TechCrunch https://tcrn.ch/2ScBGPD
