Photonic computing startup Lightmatter is taking its big shot at the rapidly growing AI computation market with a hardware-software combo it claims will help the industry level up — and save a lot of electricity to boot.

Lightmatter’s chips use light, rather than electrical current, to carry out computations such as matrix-vector products. This math is at the heart of a lot of AI work and is currently performed by GPUs and TPUs that specialize in it but use traditional silicon gates and transistors.
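For readers who want to see what that math looks like, here is a minimal sketch of a matrix-vector product in plain Python. The `matvec` function and the example numbers are made up for illustration; the sketch only shows the operation itself, which is what a single dense neural network layer boils down to, and says nothing about how Lightmatter's hardware carries it out.

```python
def matvec(weights, x):
    """Multiply a matrix (a list of rows) by a vector (a list of numbers)."""
    # Each output element is the dot product of one matrix row with the input.
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


# Hypothetical 3x2 weight matrix and 2-element input, chosen for easy checking.
weights = [
    [1.0, 2.0],
    [0.0, -1.0],
    [0.5, 0.5],
]
x = [2.0, 4.0]

y = matvec(weights, x)
print(y)  # [10.0, -4.0, 3.0]
```

A large model repeats this operation billions of times with far bigger matrices, which is why hardware that does it cheaply matters so much.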

The issue with those is that we’re approaching the limits of density and therefore speed for a given wattage or size. Advances are still being made, but at great cost, and they are pushing the edges of classical physics. The supercomputers that make training models like GPT-4 possible are enormous, consume huge amounts of power and produce a lot of waste heat.

“The biggest companies in the world are hitting an energy power wall and experiencing massive challenges with AI scalability. Traditional chips push the boundaries of what’s possible to cool, and data centers produce increasingly large energy footprints. AI advances will slow significantly unless we deploy a new solution in data centers,” said Lightmatter CEO and founder Nick Harris.

“Some have projected that training a single large language model can take more energy than 100 U.S. homes consume in a year. Additionally, there are estimates that 10%-20% of the world’s total power will go to AI inference by the end of the decade unless new compute paradigms are created.”

Lightmatter, of course, intends to be one of those new paradigms. Its approach is, at least potentially, faster and more efficient, using arrays of microscopic optical waveguides to let the light essentially perform logic operations just by passing through them: a sort of analog-digital hybrid. Since the waveguides are passive, the main power draw is creating the light itself, then reading and handling the output.

One really interesting aspect of this form of optical computing is that you can increase the computing power of the chip just by using more than one color at once. Blue does one operation while red does another, though in practice it’s more like light at a wavelength of 800 nanometers does one and light at 820 does another. It’s not trivial to do so, of course, but these “virtual chips” can vastly increase the amount of computation done on the array. Twice the colors, twice the computing power.
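The scaling argument can be illustrated with a toy model. The sketch below assumes, purely for illustration, that each wavelength carries its own independent input vector through the same array in a single pass; the `multiplexed_pass` function, the wavelength values beyond 800 and 820, and the numbers are all hypothetical and are not a description of Lightmatter's actual design.

```python
def matvec(weights, x):
    """Multiply a matrix (a list of rows) by a vector (a list of numbers)."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def multiplexed_pass(weights, channels):
    """Toy model of one pass through the array.

    `channels` maps a wavelength in nanometers to the input vector carried
    on that wavelength. Every channel is transformed in the same pass.
    """
    return {wavelength_nm: matvec(weights, x) for wavelength_nm, x in channels.items()}


weights = [
    [1.0, 0.0],
    [1.0, 1.0],
]

# Two colors: two independent results from one pass.
two_colors = {800: [1.0, 2.0], 820: [3.0, 4.0]}
# Four colors: four independent results from one pass through the same array.
four_colors = {800: [1.0, 2.0], 820: [3.0, 4.0], 840: [5.0, 6.0], 860: [7.0, 8.0]}

out_two = multiplexed_pass(weights, two_colors)
out_four = multiplexed_pass(weights, four_colors)

print(out_two)  # {800: [1.0, 3.0], 820: [3.0, 7.0]}
print(len(out_four) / len(out_two))  # 2.0
```

In this simplified picture the work done per pass grows in step with the number of wavelengths, which is the sense in which doubling the colors doubles the computation. Real devices face limits, such as crosstalk between channels, that the toy model ignores.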

Harris started the company based on optical computing work he and his team did at MIT (which is licensing the relevant patents to them) and managed to wrangle an $11 million seed round back in 2018. One investor said then that “this isn’t a science project,” but Harris admitted in 2021 that while they knew “in principle” the tech should work, there was a hell of a lot to do to make it operational. Fortunately, he was telling me that in the context of investors dropping a further $80 million on the company.

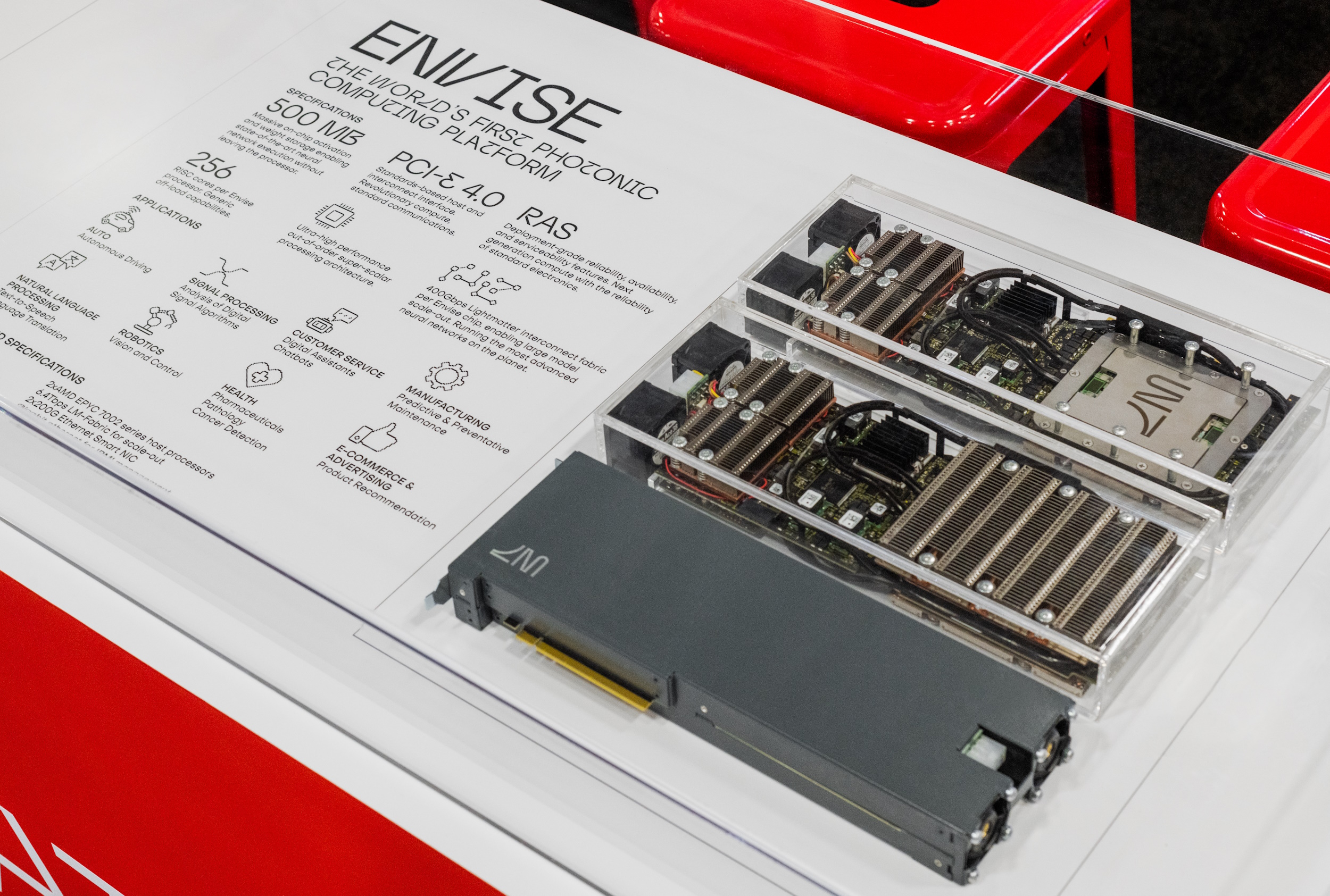

Now Lightmatter has raised a $154 million C round and is preparing for its actual debut. It has several pilots going with its full stack of Envise (computing hardware), Passage (interconnect, crucial for large computing operations) and Idiom, a software platform that Harris says should let machine learning developers adapt quickly.

A Lightmatter Envise unit in captivity. Image Credits: Lightmatter

“We’ve built a software stack that integrates seamlessly with PyTorch and TensorFlow. The workflow for machine learning developers is the same from there — we take the neural networks built in these industry standard applications and import our libraries, so all the code runs on Envise,” he explained.

The company declined to make any specific claims about speedups or efficiency improvements, and because it’s a different architecture and computing method it’s hard to make apples-to-apples comparisons. But we’re definitely talking along the lines of an order of magnitude, not a measly 10% or 15%. Interconnect is similarly upgraded, since it’s useless to have that level of processing isolated on one board.

Of course, this is not the kind of general-purpose chip that you could use in your laptop; it’s highly specific to this task. But it’s the lack of task specificity at this scale that seems to be holding back AI development — though “holding back” is the wrong term since it’s moving at great speed. But that development is hugely costly and unwieldy.

The pilots are in beta, and mass production is planned for 2024, at which point presumably they ought to have enough feedback and maturity to deploy in data centers.

The funding for this round came from SIP Global, Fidelity Management & Research Company, Viking Global Investors, GV, HPE Pathfinder and existing investors.

Lightmatter’s photonic AI hardware is ready to shine with $154M in new funding by Devin Coldewey originally published on TechCrunch

