A few years back, Occipital released a sensor that turned your iPad into a portable 3D scanner. Called the Occipital Structure, it packed lasers and cameras into a snap-on package that let the iPad be used for anything from accurately measuring a room’s dimensions to creating 3D models for prosthetic limbs. The catch? For the most part, it only worked with iOS.

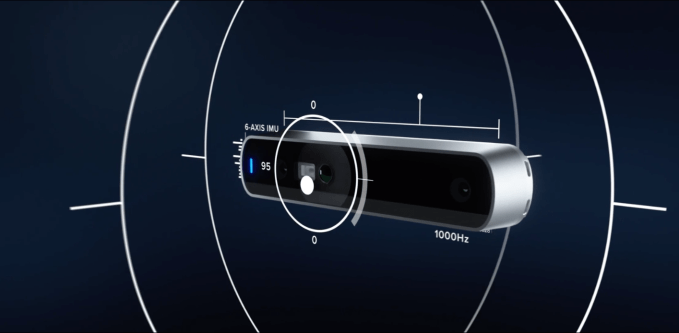

Today Occipital is announcing a more flexible (and powerful) alternative: Structure Core. With built-in motion sensors and compatibility with Windows, Linux, Android or macOS, it’s meant for projects where cramming in an iPad just doesn’t make sense. Think robots, or mixed reality headsets.

By blasting out an array of laser dots and tracking them with the Core’s onboard cameras, Structure’s SDK is able to map the environment and determine the sensor’s position within it. You could, for example, use the depth sensors to have your robot build a map of a room, then use the SDK’s built-in route tool to get it from point A to point B on command (without bashing into everything along the way).
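To make the "map a room, then route through it" idea concrete, here is a minimal sketch of path planning on an occupancy grid. It does not use Occipital's SDK, and the article doesn't say how the built-in route tool works. The grid layout, the `plan_route` function and the choice of breadth-first search are all assumptions made for illustration.

```python
from collections import deque

def plan_route(grid, start, goal):
    """Hypothetical helper: shortest 4-connected path on an occupancy grid.

    grid is a list of rows; 0 means free floor, 1 means obstacle.
    start and goal are (row, col) tuples. Returns a list of cells
    from start to goal, or None if the goal cannot be reached.
    """
    rows, cols = len(grid), len(grid[0])
    came_from = {start: None}  # doubles as the visited set
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk the parent links back to the start, then reverse.
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and (nr, nc) not in came_from:
                came_from[(nr, nc)] = cell
                queue.append((nr, nc))
    return None

# A made-up 4x4 room: a wall across row 1 with a single gap at column 2.
ROOM = [
    [0, 0, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 0],
    [0, 1, 1, 0],
]

route = plan_route(ROOM, (0, 0), (3, 0))
print(route)
```

A real robot would build the grid from depth data and would likely use a cost-aware planner such as A*. Breadth-first search is used here only because it is the shortest way to show the idea.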

Beyond no longer being tied to iOS, Structure Core also beefs things up under the hood. Whereas the original Structure sensor uses USB 2.0/Lightning, Structure Core taps USB 3.0, which, the company tells me, means the SDK can pull considerably more sensor data, faster. They’ve switched from a rolling shutter to a global shutter (meaning every pixel on the sensor is exposed at the same time, helping to stop tearing/distortion on fast-moving objects), and the field of view has been greatly improved.

Occipital isn’t the first to dabble in this space, of course. DIYers have been repurposing Microsoft’s Kinect hardware (RIP) to give their robots basic vision abilities for years; meanwhile, Intel has an arm it calls RealSense focusing on drop-in vision boards. But with companies like Misty Robotics turning to Occipital to give their robots sight, it made sense to take the iPad out of the equation and offer something that could stand on its own.

Structure Core will come in two forms: enclosed, or bare. The first wraps the chipset/sensors/etc. in aluminum in a way that’s ready to be strapped right into a project; the second sheds the enclosure and gives you on-board mounting points for when you’re looking for something more custom.

Pricing is a bit peculiar for a piece of hardware: the sooner you need it, the more it’ll cost. A limited run of early units will ship in the next few weeks for $600 each; the next batch goes out in January, for $499. By March, they expect the price to officially settle at $399 each. The company also tells me that they’re open to volume pricing if a team needs a bunch of units, though those prices aren’t available yet.

from TechCrunch https://ift.tt/2AySlRH

via IFTTT
