Google today announced that Nvidia’s high-powered Tesla V100 GPUs are now available for workloads on both Compute Engine and Kubernetes Engine. For now, the V100s are only in public beta. For those who need Google’s full support for their GPU workloads, the company also announced today that the slightly less powerful Nvidia P100 GPUs are now out of beta and generally available.

The V100 GPUs remain the most powerful chips in Nvidia’s lineup for high-performance computing. They have been around for quite a while now, and Google is a bit late to the game here: AWS and IBM already offer V100s to their customers, and they are currently in private preview on Azure.

While Google stresses that it also uses NVLink, Nvidia’s fast interconnect for multi-GPU processing, it’s worth noting that its competitors do this, too. According to Google, NVLink offers GPU-to-GPU bandwidth nine times higher than traditional PCIe connections, resulting in a performance boost of up to 40 percent for some workloads.

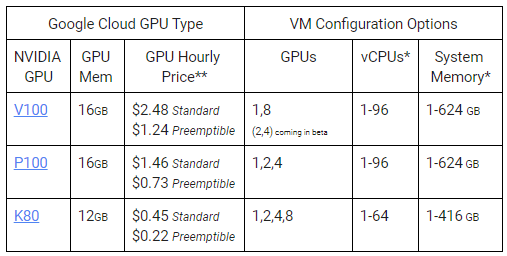

All of that power comes at a price, of course. An hour of V100 usage costs $2.48, while the P100 will set you back $1.46 per hour. These are the standard prices; preemptible machines come in at half that. In addition, you’ll also need to pay Google for the regular virtual machines or containers the GPUs are attached to.
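To make the pricing concrete, here is a small back-of-the-envelope sketch. It assumes the quoted $2.48 and $1.46 rates are per GPU per hour and that preemptible pricing is exactly half the standard rate. It also ignores the separate VM or container charges. The `gpu_cost` helper is hypothetical and not part of any Google tool.

```python
# Hypothetical cost estimator based on the standard hourly prices quoted above.
# Assumptions: prices are per GPU per hour, preemptible = half the standard rate,
# and the separate VM/container charges are NOT included.
STANDARD_HOURLY_USD = {"V100": 2.48, "P100": 1.46}


def gpu_cost(gpu: str, num_gpus: int, hours: float, preemptible: bool = False) -> float:
    """Return the estimated GPU-only charge in US dollars, rounded to cents."""
    rate = STANDARD_HOURLY_USD[gpu]  # raises KeyError for unknown GPU types
    if preemptible:
        rate /= 2  # preemptible machines cost half the standard price
    return round(rate * num_gpus * hours, 2)


if __name__ == "__main__":
    print(gpu_cost("V100", 8, 1))                    # eight-GPU V100 machine, one hour
    print(gpu_cost("V100", 8, 1, preemptible=True))  # same machine, preemptible
    print(gpu_cost("P100", 4, 10))                   # four-GPU P100 machine, ten hours
```

Under those assumptions, one hour on an eight-GPU V100 machine comes to $19.84 in GPU charges alone, or $9.92 if preemptible.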

V100 machines are now available in two configurations with either one or eight GPUs, with support for two or four attached GPUs coming in the future. The P100 machines come with one, two or four attached GPUs.

from TechCrunch https://ift.tt/2HGBsH4

via IFTTT