Japan’s ambitious second asteroid return mission, Hayabusa 2, has collected a wealth of material from its destination, Ryugu, which astronomers and other interested parties are almost certainly champing at the bit to play with. Though they may look like ordinary bits of charcoal, they’re genuine asteroid surface material — and a little something shiny, too.

Hayabusa 2 was launched in 2014 and arrived at the asteroid Ryugu in 2018, at which point it deployed a couple of landers to test surface conditions. It touched down itself the following year, blasting the surface with a space gun so that it could collect not just the surface gravel but what might lie beneath it. After a long trip home, its sample capsule reentered the atmosphere on December 5 and was recovered in the Australian desert.

Although everything appeared to work perfectly, the team could never be sure they had actually gotten the samples they hoped for until they opened the sample collection containers in a sealed room back at headquarters. The materials inside have been teased in a few tweets, but today JAXA posted all of the public images along with some new explanations and discoveries.

For one thing, the “sample catcher” itself held grains of sediment from Ryugu. Perhaps this material, exposed to different conditions than those inside the containers, will prove different when analyzed.

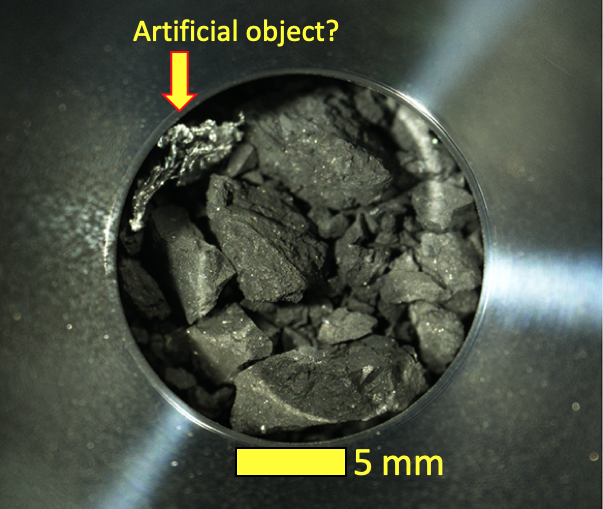

For another, sample container C appears to have an “artificial object” in it! But don’t get excited — as the team writes on their blog, “the origin is under investigation, but a probable source is aluminium scraped off the spacecraft sampler horn as the projectile was fired to stir up material during touchdown.”

In other words, it’s probably a bit of the probe that came off during the not-so-gentle process of shooting the asteroid and crashing into it.

But the most important part is the rocks collected as planned. As the scale bar shows, these are little more than pebbles, but they’re large enough to show evidence of the many processes that produced their particular shape and makeup. There’s also plenty of finer dirt and dust from below the surface that scientists hope could show signs of organic materials and water, the building blocks of life as we know it.

The success of the mission is worth celebrating, and the team has only just begun studying the materials brought back from Ryugu — so we can expect more information soon as they perform the painstaking work of analysis on these priceless samples. The Hayabusa 2 Twitter account is probably the best way to stay up to date day to day.

from TechCrunch https://ift.tt/3hqKr1v