Filmic had a solid cameo at the iPhone launch event in Cupertino last September. Such an appearance is always a vote of confidence from Apple. In this case, Apple was most interested in how the pro-focused camera app maker planned to harness the iPhone 11 Pro's triple-camera setup.

The feature arrives on the App Store today in the form of DoubleTake. It's launching as an iOS exclusive tailored to the imaging capabilities of the iPhone 11, 11 Pro and 11 Pro Max. In fact, because it relies on their multi-camera capabilities, it will only work on those devices.

Pros continue to be a primary focus for the company, as evidenced by the presentation back in September. Over at the developer's blog, you can find a wide range of work shot with the company's Filmic Pro app, from short films to music videos. With DoubleTake, the company is broadening its capabilities by letting shooters capture multiple focal lengths at once using the different cameras.

The most visually compelling use here, however, is Shot/Reverse Shot, which takes video from both the rear-facing and front-facing cameras at once. Obviously there’s going to be a gulf in image quality between the front and back, but the ability to do both simultaneously opens up some pretty fascinating possibilities.

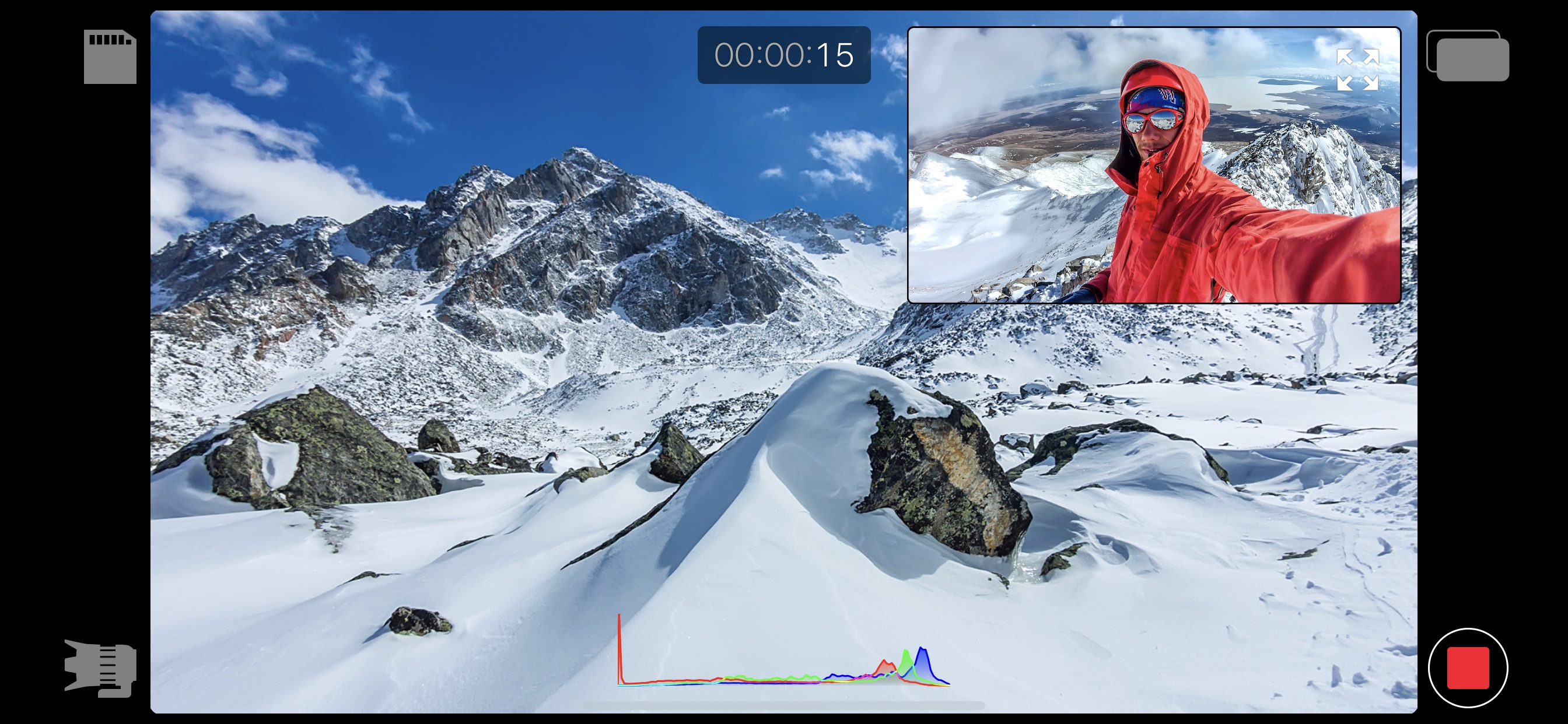

In a press release, Filmic points to the ability to shoot two actors in conversation, eliminating the need for multiple takes. That's certainly interesting for capturing genuine, organic reactions, but I think the most promising possibilities lie beyond such scripted scenes. You could, say, shoot a two-way podcast conversation by placing an iPhone between the two speakers and using the Split-Screen mode.

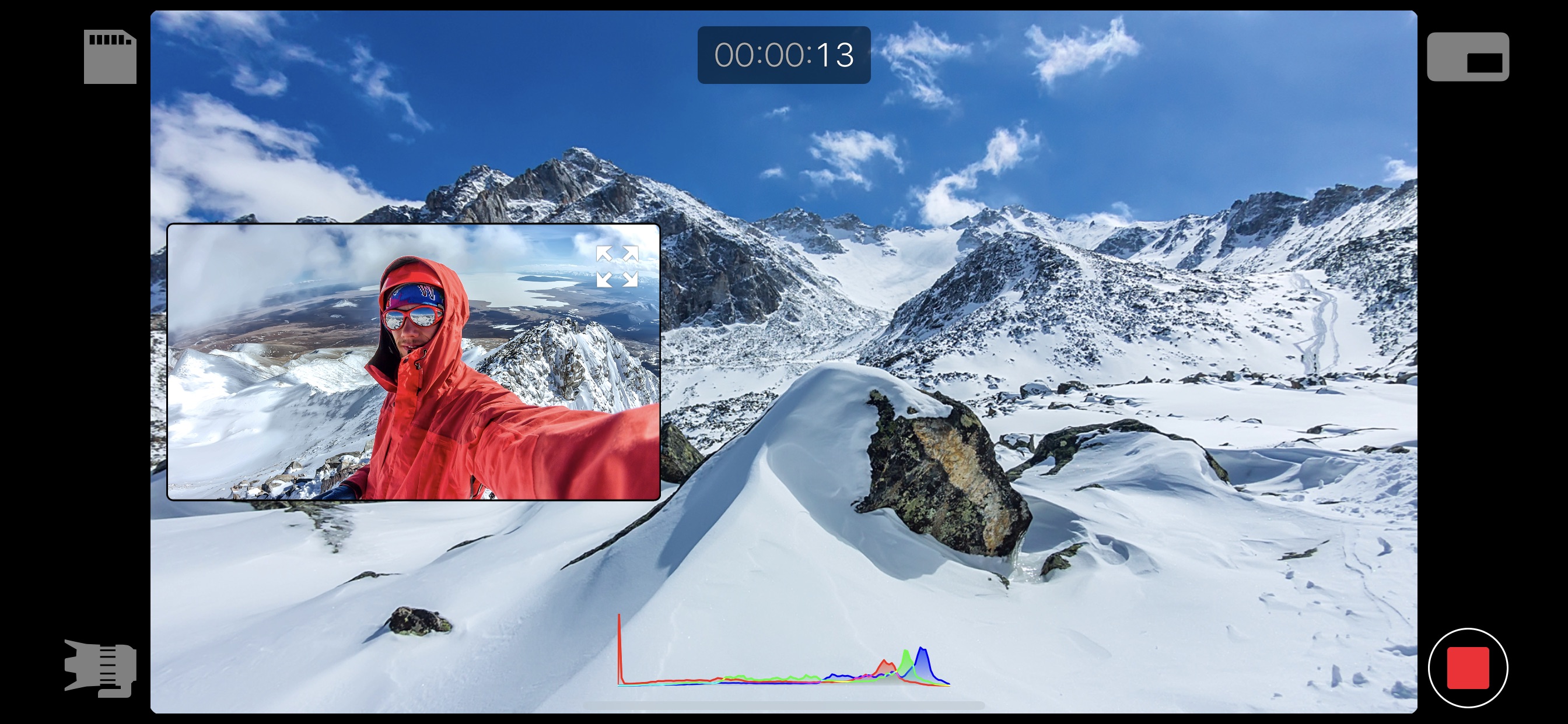

Or there's the Picture-in-Picture (PiP) window, which opens up some dynamic possibilities for vloggers, allowing them to insert themselves into what they're shooting on the fly. For newer filmmakers not beholden to more traditional aesthetic constraints, it's easy to see the lines between these formats blurring. Shooting on a mobile device opens up some tremendous possibilities.

In the case of something like PiP, the editing is actually happening on the fly, in real time. You can always opt to do all of that in post-production, but there is perhaps something to be said for the kind of decision-making that happens with live editing; it's akin to a live TV multicamera switcher. I suspect broadcast journalists looking to pare their equipment down to the bare mobile minimum will find something to like from that perspective.

DoubleTake is available starting today as a free download.

from TechCrunch https://ift.tt/2O2mj7V