Machine learning is everywhere these days, but it’s usually more or less invisible: it sits in the background, optimizing audio or picking out faces in images. But this new system is not only visible, but physical: it performs AI-type analysis not by crunching numbers, but by bending light. It’s weird and unique, but counter-intuitively, it’s an excellent demonstration of how deceptively simple these “artificial intelligence” systems are.

Machine learning systems, which we frequently refer to as a form of artificial intelligence, at their heart are just a series of calculations made on a set of data, each building on the last or feeding back into a loop. The calculations themselves aren’t particularly complex — though they aren’t the kind of math you’d want to do with a pen and paper. Ultimately all that simple math produces a probability that the data going in is a match for various patterns it has “learned” to recognize.
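
To make "a series of calculations" concrete, here is a minimal sketch of a tiny two-layer network in plain Python. The weights, biases and input values are made up for illustration (a real model would learn them during training), and the function names are hypothetical, not taken from the researchers' work.

```python
import math

def dense(inputs, weights, biases):
    """One layer: for each output unit, a weighted sum of the inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def relu(values):
    """Simple nonlinearity: negative values become zero."""
    return [max(0.0, v) for v in values]

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical "trained" parameters: 3 inputs -> 2 hidden units -> 3 classes.
W1 = [[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]]
b1 = [0.0, 0.1]
W2 = [[1.0, -1.0], [-0.5, 0.7], [0.2, 0.2]]
b2 = [0.0, 0.0, 0.0]

def predict(x):
    """Each layer builds on the output of the previous one."""
    hidden = relu(dense(x, W1, b1))
    return softmax(dense(hidden, W2, b2))

probs = predict([1.0, 0.5, -1.0])
print(probs)  # one probability per pattern the network "knows"
```

Nothing here goes beyond multiplication, addition and an exponential; the apparent intelligence comes from how many of these steps are stacked and how the numbers were chosen.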

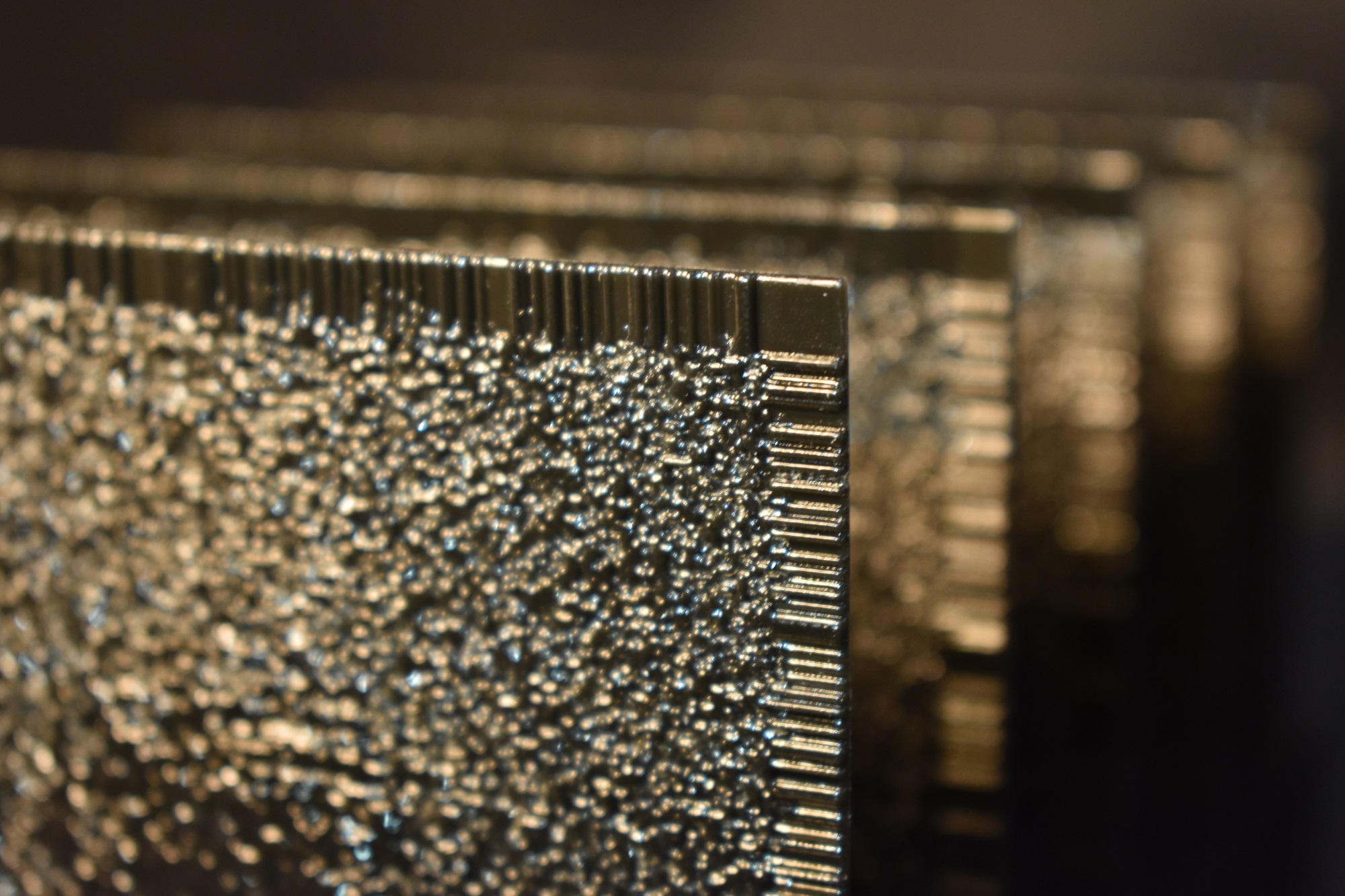

The thing is, though, that once these “layers” have been “trained” and the math finalized, the system is in many ways performing the same calculations over and over again. Usually that just means it can be optimized and won’t take up much space or CPU power. But researchers from UCLA show that it can literally be solidified: the layers become actual 3D-printed sheets of transparent material, imprinted with complex diffraction patterns that do to light passing through them what the math would have done to numbers.

If that’s a bit much to wrap your head around, think of a mechanical calculator. Nowadays it’s all done digitally in computer logic, but back in the day calculators used actual mechanical pieces moving around — something adding up to 10 would literally cause some piece to move to a new position. In a way this “diffractive deep neural network” is a lot like that: it uses and manipulates physical representations of numbers rather than electronic ones.

As the researchers put it:

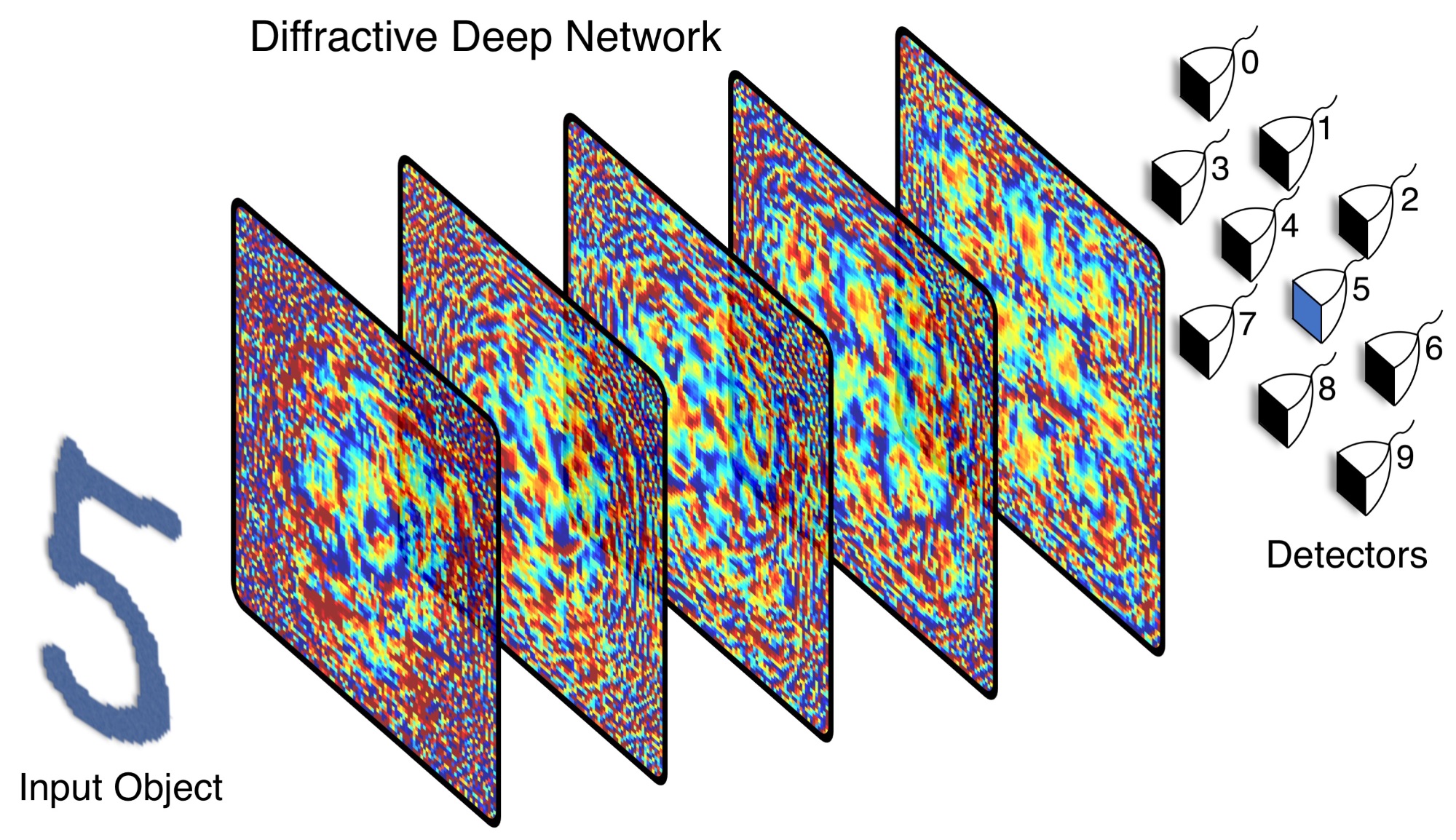

Each point on a given layer either transmits or reflects an incoming wave, which represents an artificial neuron that is connected to other neurons of the following layers through optical diffraction. By altering the phase and amplitude, each “neuron” is tunable.
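
As a rough illustration of that description, the sketch below treats light as a list of complex numbers, one per "neuron". Each neuron scales the amplitude and shifts the phase of the wave passing through it, and every point on the next plane receives a contribution from every neuron. This is a heavily simplified, one-dimensional Huygens-style sum written for this post, not the researchers' actual model; the wavelength, spacing and phase values are assumptions chosen only so the example runs.

```python
import cmath
import math

WAVELENGTH = 0.75e-3  # metres; an illustrative value, not a spec

def modulate(field, amplitudes, phases):
    """Each neuron multiplies the incoming wave by amplitude * e^(i * phase)."""
    return [e * a * cmath.exp(1j * p)
            for e, a, p in zip(field, amplitudes, phases)]

def propagate(field, src_x, dst_x, gap, wavelength):
    """Sum the secondary waves from every source point at each destination point."""
    k = 2 * math.pi / wavelength  # wavenumber
    out = []
    for xd in dst_x:
        total = 0j
        for e, xs in zip(field, src_x):
            r = math.hypot(xd - xs, gap)  # distance from neuron to target point
            total += e * cmath.exp(1j * k * r) / r
        out.append(total)
    return out

# Five neurons spaced 1 mm apart, three detector points 30 mm away.
layer_x = [i * 1e-3 for i in range(-2, 3)]
detector_x = [-1e-3, 0.0, 1e-3]

incoming = [1 + 0j] * 5                 # a flat wave hitting the layer
amplitudes = [1.0] * 5                  # fully transparent neurons
phases = [0.0, 0.5, 1.0, 0.5, 0.0]      # hypothetical "trained" phase delays

after_layer = modulate(incoming, amplitudes, phases)
at_detector = propagate(after_layer, layer_x, detector_x,
                        gap=30e-3, wavelength=WAVELENGTH)
intensities = [abs(e) ** 2 for e in at_detector]  # what a sensor would measure
print(intensities)
```

Changing the `phases` list changes where the light ends up, which is the sense in which each neuron is tunable.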

“Our all-optical deep learning framework can perform, at the speed of light, various complex functions that computer-based neural networks can implement,” write the researchers in the paper describing their system, published today in Science.

To demonstrate it, they trained a deep learning model to recognize handwritten numerals. Once it was final, they took the layers of matrix math and converted them into a series of optical transformations. For example, a layer might add two values together by refocusing the light representing each onto a single area of the next layer. The real calculations are much more complex, but hopefully you get the idea.
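
The "adding by refocusing" idea can be sketched in a few lines. Assuming two coherent beams land on the same spot, their complex amplitudes simply add, and the relative phase decides whether they reinforce or cancel. The values 0.3 and 0.5 are arbitrary and the `combine` helper is hypothetical.

```python
import cmath
import math

def combine(fields):
    """Coherent light arriving at one spot: the complex amplitudes add up."""
    return sum(fields, 0j)

# Two beams arriving in phase: the amplitudes add (0.3 + 0.5).
in_phase = combine([0.3 + 0j, 0.5 + 0j])

# Delay the second beam by half a wavelength (phase shift of pi):
# the same two beams now partly cancel (0.5 - 0.3).
out_of_phase = combine([0.3 + 0j, 0.5 * cmath.exp(1j * math.pi)])

print(abs(in_phase), abs(out_of_phase))
```

So a printed pattern that controls phase is enough to get both addition and subtraction out of nothing but overlapping light.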

By arranging millions of these tiny transformations on the printed plates, the light that enters one end comes out the other structured in such a way that the system can tell whether it’s a 1, 2, 3 and so on with better than 90 percent accuracy.

What use is that, you ask? Well, none in its current form. But neural networks are extremely flexible tools, and it would be perfectly possible to have a system recognize letters instead of numbers, making an optical character recognition system work totally in hardware with almost no power or calculation required. And why not basic face or figure recognition, no CPU necessary? How useful would that be to have in your camera?

The real limitations here are manufacturing ones: it’s difficult to create the diffractive plates with the level of precision required to perform some of the more demanding processing. After all, if you need to calculate something to the seventh decimal place, but the printed version is only accurate to the third, you’re going to run into trouble.
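
Here is a small sketch of that precision problem, with rounding standing in for manufacturing tolerance. The weights and inputs are invented for the example, and a real printed plate would have errors in phase and thickness rather than in decimal digits, so treat this as an analogy only.

```python
def quantize(values, decimals):
    """Pretend the printer can only reproduce values to this many decimal places."""
    return [round(v, decimals) for v in values]

def weighted_sum(weights, inputs):
    return sum(w * x for w, x in zip(weights, inputs))

# Hypothetical weights that need seven decimal places to express exactly.
weights = [0.1234567, -0.7654321, 0.3333333, 0.9999999]
inputs = [1.0, 2.0, 3.0, 4.0]

exact = weighted_sum(weights, inputs)
coarse = weighted_sum(quantize(weights, 3), inputs)  # "printed" at 3 decimals
error = abs(exact - coarse)

print(exact, coarse, error)
```

The error from a single weighted sum is small, but a network chains many such sums across several layers, so small per-weight errors can compound.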

This is only a proof of concept — there’s no dire need for giant number-recognition machines — but it’s a fascinating one. The idea could prove to be influential in camera and machine learning technology — structuring light and data in the physical world rather than the digital one. It may feel like it’s going backwards, but perhaps the pendulum is simply swinging back the other direction.

from TechCrunch https://ift.tt/2mLRqGS
